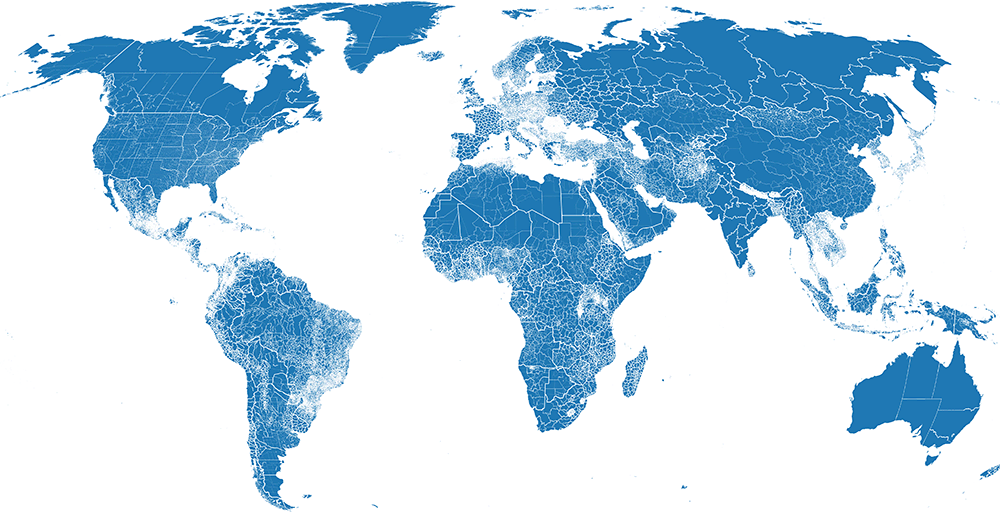

Global Edge-matched Subnational Boundaries

Updated:

Humanitarian

Uses UN Common Operational Datasets (CODs) where available, falling back to geoBoundaries for regions without COD coverage. Represents the latest available data for humanitarian operational use. Uses the OpenStreetMap International ADM0 worldview for edge-matching.
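The fallback rule described above can be sketched in a few lines of Python. This is only an illustration of the selection logic, not the project's actual pipeline: the function name `select_sources`, the placeholder country codes, and the coverage set are all hypothetical.

```python
from typing import Dict, Iterable, Set


def select_sources(countries: Iterable[str], cod_coverage: Set[str]) -> Dict[str, str]:
    """Pick a boundary source per country: UN COD if covered, else geoBoundaries."""
    return {
        code: "UN COD" if code in cod_coverage else "geoBoundaries"
        for code in countries
    }


# Placeholder codes and coverage, invented for illustration only.
countries = ["AAA", "BBB", "CCC"]
cod_coverage = {"AAA", "CCC"}

sources = select_sources(countries, cod_coverage)
for code, source in sources.items():
    print(f"{code}: {source}")
```

Under the same assumptions, an edition that uses geoBoundaries exclusively corresponds to calling the function with an empty coverage set.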

| Level | Geopackage (QGIS) | Geodatabase (ArcGIS) |
|---|---|---|

Attribution: FieldMaps, UN CODs, geoBoundaries, U.S. Department of State, OpenStreetMap

License: Open Data Commons Open Database License (ODbL)

Conditions: Derived works must include attribution, be offered under the same license, and keep the data openly accessible

Open

Uses geoBoundaries exclusively to ensure all data comes from sources with clearly defined licenses. Suitable for academic or commercial use. Uses the OpenStreetMap International ADM0 worldview for edge-matching.

| Level | Geopackage (QGIS) | Geodatabase (ArcGIS) |
|---|---|---|

Attribution: FieldMaps, geoBoundaries, U.S. Department of State, OpenStreetMap

License: Open Data Commons Open Database License (ODbL)

Conditions: Derived works must include attribution, be offered under the same license, and keep the data openly accessible

Download metadata tables as: json | csv | xlsx

See GitHub for technical information on how edge-matching is performed.